Goal: To determine how well users can track moving objects in VR when given different modalities of sensory information (auditory vs. visual vs. audio-visual).

In this work, we examined the influence of sensory modality and visual feedback on the accuracy of head-gaze-based dynamic target tracking in virtual reality. To this end, we conducted a between-subjects study in which participants tracked a bike rider using visual, auditory, or audio-visual information. Each participant performed two blocks of experimental trials with a calibration phase in between. In the calibration phase, users were given visual feedback of their head-gaze direction in the form of a yellow cursor. We go on to discuss how head-tracking performance was affected by the different types of perceptual information afforded.
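
To make "tracking accuracy" concrete, one common way to score head-gaze tracking is the angle between the head's forward ray and the direction from the head to the target, averaged over the frames of a trial. The sketch below assumes that metric; the function names `angular_error_deg` and `mean_tracking_error`, the `(x, y, z)` tuple representation, and per-frame sampling are hypothetical illustrations and are not taken from the study itself.

```python
import math

# Hypothetical metric (assumption, not the study's confirmed measure):
# angular error between the head-gaze ray and the direction to the target.
def angular_error_deg(head_pos, head_forward, target_pos):
    """Angle in degrees between the head-gaze direction and the head-to-target vector."""
    to_target = [t - h for t, h in zip(target_pos, head_pos)]
    dot = sum(a * b for a, b in zip(head_forward, to_target))
    norm = math.hypot(*head_forward) * math.hypot(*to_target)
    # Clamp to [-1, 1] to guard against floating-point drift before acos.
    cos_theta = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_theta))


def mean_tracking_error(samples):
    """Mean angular error over a trial; each sample is (head_pos, head_forward, target_pos)."""
    errors = [angular_error_deg(*s) for s in samples]
    return sum(errors) / len(errors)


if __name__ == "__main__":
    # Head at the origin looking down +z; the rider is first straight ahead, then 45 degrees off-axis.
    trial = [
        ((0, 0, 0), (0, 0, 1), (0, 0, 5)),
        ((0, 0, 0), (0, 0, 1), (5, 0, 5)),
    ]
    print(mean_tracking_error(trial))
```

Lower values mean the participant's head pointed more closely at the rider; comparing this per-trial mean across the visual, auditory, and audio-visual groups, and before versus after calibration, is one way such an analysis could be organized.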

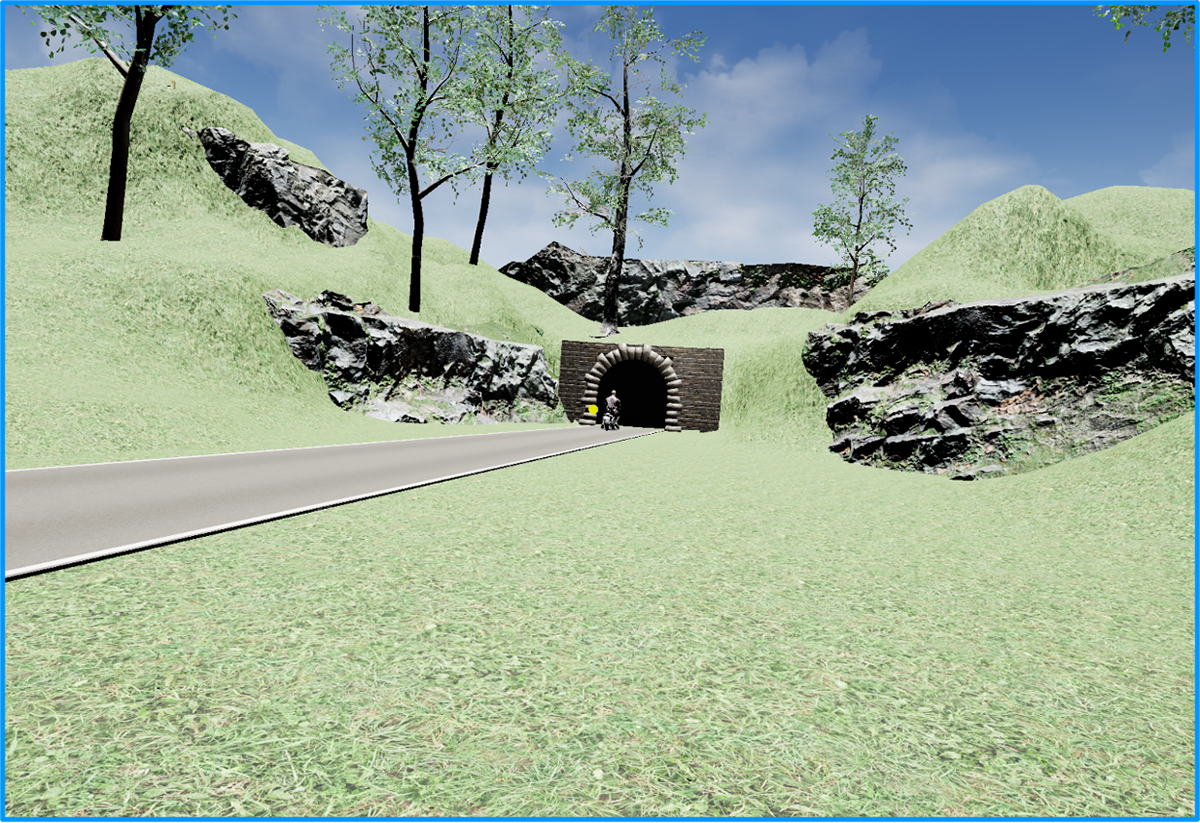

The rider emerges from the tunnel on the left.

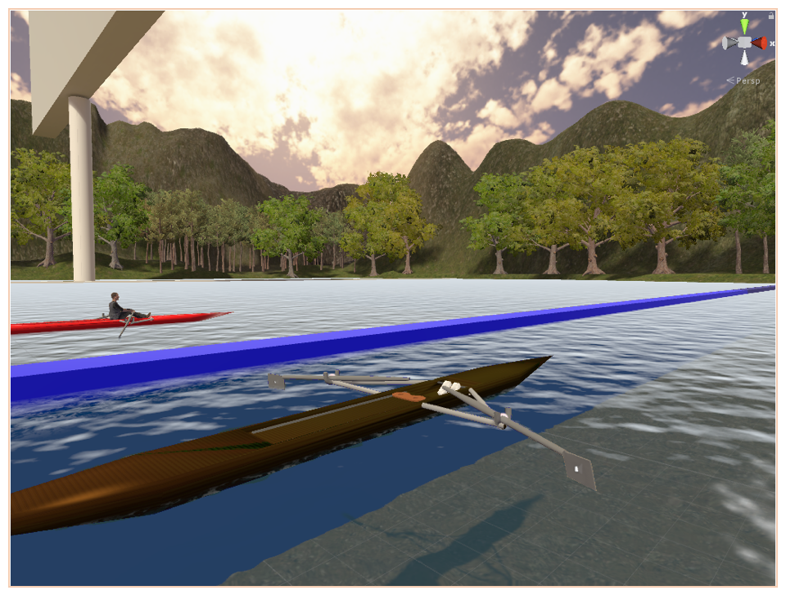

The rider midway through the journey.